Context engineering is the latest buzzword to break through in Artificial Intelligence. Its emergence is linked to the rapid evolution of LLM applications - from the early AI chatbots that came out in late 2022 to the sophisticated autonomous agents of today. Here I'll explain exactly what context engineering is and why it's become such a hot topic.

When tools like ChatGPT first appeared, there was a big focus on mastering the art of effective prompting - or prompt engineering. Everyone now knows that prompts are the instructions we give to AI assistants to answer a question or generate an output. As well as making them clear and precise, we can improve the chances of the AI providing an accurate response on the first go if we include some additional context within our prompts (this could just be some helpful background or examples of the kind of thing we want).

For example, instead of asking ChatGPT to "Summarize this report," you could add context such as: "Summarize this 20-page quarterly sales report into a one-page executive summary for the CFO. Focus only on revenue trends, regional performance, and risks flagged by the sales team. Keep the language concise and business-like." This added contextual background can guide the AI to produce something genuinely useful on the first attempt.

Why context is growing in importance

Fast forward to today, and AI has evolved dramatically: agents can now reason and call on hundreds of tools to solve complex, multi-step problems. As a result, providing the right context has become even more essential for getting LLMs to do what you want.

The phrase 'context engineering' gained significant traction through Andrej Karpathy, a prominent voice in the world of AI. A former Senior Director of AI at Tesla and a member of the founding team at OpenAI, he's also credited with coining the term 'vibe coding'. When he shares his thoughts, the AI community pays attention.

In Karpathy's words, "People associate prompts with short task descriptions you'd give an LLM in your day-to-day use." But he goes on to argue that in a world where we're now using sophisticated "industrial strength" LLM apps, context is playing a much bigger role than the simple text descriptions or instructions we include within prompts. Context engineering is the term he applies to what he describes as "the delicate art and science of filling the context window with just the right information for the next step."

Sources of context

LLM agents or apps can receive context from a variety of sources, including the developer of the application, the user, previous interactions, tool calls, or other external data.

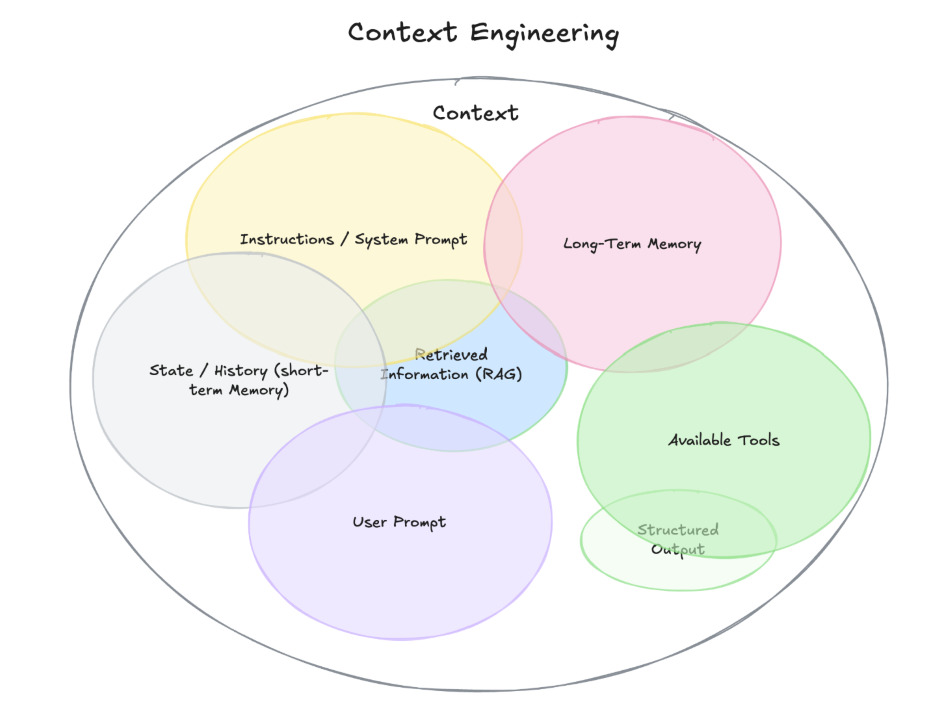

In the diagram above, Phil Schmid, a Senior AI Relations Engineer at Google DeepMind, builds on this idea by illustrating the various sources of context - which in his view is "everything the model sees before it generates a response."

This includes the contextual information in the system prompt (the guidelines typically provided by the developer to tell the AI chatbot or agent how it should operate, including restrictions and guard rails) and in the short-term and long-term memory (based on recent and previous user interactions). It also includes information obtained via tools accessible to the agent, as well as relevant external data that an LLM application can be configured to pull in using retrieval-augmented generation (RAG).
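To make these sources concrete, here is a minimal sketch of how an application might gather them into a single block of text before calling the model. The `ContextSources` class, the `build_context` function and the section titles are all hypothetical names chosen for illustration; real agent frameworks structure this differently.

```python
from dataclasses import dataclass, field


@dataclass
class ContextSources:
    """Hypothetical container for the context sources described above."""
    system_prompt: str
    short_term_memory: list[str] = field(default_factory=list)
    long_term_memory: list[str] = field(default_factory=list)
    tool_results: list[str] = field(default_factory=list)
    retrieved_documents: list[str] = field(default_factory=list)


def build_context(sources: ContextSources, user_message: str) -> str:
    """Join every non-empty source into one labelled block of text."""
    sections = [
        ("System prompt", [sources.system_prompt]),
        ("Short-term memory", sources.short_term_memory),
        ("Long-term memory", sources.long_term_memory),
        ("Tool results", sources.tool_results),
        ("Retrieved documents (RAG)", sources.retrieved_documents),
        ("User message", [user_message]),
    ]
    parts = []
    for title, items in sections:
        if not items:
            continue  # leave out sources that have nothing to contribute
        parts.append(f"[{title}]\n" + "\n".join(items))
    return "\n\n".join(parts)


if __name__ == "__main__":
    sources = ContextSources(
        system_prompt="You are a helpful travel assistant.",
        tool_results=["Calendar check: everyone is free 3-10 June."],
    )
    print(build_context(sources, "Book a holiday for the four of us."))
```

Empty sources are skipped rather than printed as blank sections, so the model only sees what is actually available for the current request.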

Structured outputs in the diagram refers to context that tells an LLM to generate responses in a predetermined format. So, if you are asking for information about people's political affiliations in the UK, you might give it a JSON schema with a party field that accepts only Labour, Conservatives and the country's other main political parties.
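As a rough illustration of the political affiliation example, the sketch below defines a small JSON-style schema and checks a model's reply against it. The field names, the `parse_affiliation` helper and the exact list of parties are assumptions made for this example, and the validation is hand-written rather than taken from any particular library.

```python
import json

# Hypothetical schema: the party list is illustrative, not exhaustive.
PARTY_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "party": {
            "type": "string",
            "enum": ["Labour", "Conservatives", "Liberal Democrats", "Other"],
        },
    },
    "required": ["name", "party"],
}


def parse_affiliation(raw_response: str) -> dict:
    """Parse a model reply and reject anything outside the schema."""
    data = json.loads(raw_response)
    for key in PARTY_SCHEMA["required"]:
        if key not in data:
            raise ValueError(f"missing required field: {key}")
    allowed = PARTY_SCHEMA["properties"]["party"]["enum"]
    if data["party"] not in allowed:
        raise ValueError(f"unexpected party value: {data['party']}")
    return data


if __name__ == "__main__":
    # A stand-in for the text an LLM might return.
    reply = '{"name": "A. Example", "party": "Labour"}'
    print(parse_affiliation(reply))
```

In practice the schema would be passed to the model as part of its context, and a check like this would catch replies that drift from the agreed format.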

Agents struggle without context

The key point is that without the appropriate context, an LLM application or agent is more likely to fail to deliver what the user has requested. Imagine you ask an AI agent to book a holiday on dates that work for you and three of your friends. Unless it has contextual information on the type of holidays everyone in the party prefers, and access to a tool for viewing all of your calendars (to check dates when everyone is available), the agent will struggle.

Too much context is bad

Importantly, according to Karpathy, effective context engineering is about providing "just the right information." He explains that "too little or of the wrong form and the LLM doesn't have the right context for optimal performance."

Too much context (including information that's not relevant or helpful to the task) forces the model to process more tokens, consuming more compute and taking longer to get to the end result.

Rather than overloading the context window with everything you have, feeding only the most relevant insights enables the system to give you exactly what you need as quickly and efficiently as possible.

When providing context in the form of documents or reports, for example, filter out irrelevant 'noise' like licensing information and sections that are unrelated to the task. Some of the hallucinations that LLMs are accused of can stem from the confusion caused by overloading them with irrelevant information. Cut this and hallucinations become less likely.
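One simple way to picture this filtering is to score each chunk of a document against the task and keep only the best matches that fit within a size budget. The sketch below uses plain keyword overlap and a word count as a stand-in for tokens; both are deliberate simplifications, and `select_relevant` is a hypothetical helper. Production systems typically rely on embeddings and a real tokenizer instead.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lower-case the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_relevant(task: str, chunks: list[str], max_words: int = 50) -> list[str]:
    """Keep the chunks that share words with the task, within a word budget."""
    task_words = tokenize(task)
    scored = []
    for chunk in chunks:
        overlap = len(task_words & tokenize(chunk))
        if overlap > 0:  # drop chunks with nothing in common with the task
            scored.append((overlap, chunk))
    # Highest overlap first; ties keep their original order.
    scored.sort(key=lambda pair: pair[0], reverse=True)

    selected, used = [], 0
    for _, chunk in scored:
        size = len(chunk.split())
        if used + size > max_words:
            continue  # skip chunks that would exceed the budget
        selected.append(chunk)
        used += size
    return selected


if __name__ == "__main__":
    chunks = [
        "Revenue grew 8% in the EMEA region.",
        "This software is licensed under the terms of the vendor agreement.",
        "Regional revenue trends show growth in APAC.",
    ]
    for chunk in select_relevant("Summarize revenue trends by region", chunks):
        print(chunk)
```

Here the licensing sentence shares no words with the task, so it never reaches the context window.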

Context is dynamic

The other important point to note: context engineering is dynamic. In Schmid's words, context is "the output of the system that runs before the main LLM call" and is tailored in the moment to the task at hand.

For example, imagine an AI assistant that helps a customer service team respond to customer questions. The "context" it requires to respond to one customer's question might be the company's most recent pricing data, while for another question it could be a library of technical documents. The assistant has to dynamically pull in the most relevant context at the moment depending on the question being asked.
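The customer service scenario can be sketched as a small routing step that runs before the main LLM call and decides which source to load. Everything here is hypothetical: the keyword lists, the loader functions and their placeholder return values are invented for illustration, and a real assistant would more likely use a classifier or the LLM itself to choose.

```python
import re


def load_pricing_data() -> str:
    # Placeholder: a real system would fetch the current price list.
    return "PRICING CONTEXT: latest price list goes here"


def load_technical_docs() -> str:
    # Placeholder: a real system would search the technical library.
    return "TECHNICAL CONTEXT: relevant manual sections go here"


# Hypothetical routing table: keywords that suggest each context source.
ROUTES = {
    "pricing": ({"price", "pricing", "cost", "discount"}, load_pricing_data),
    "technical": ({"error", "install", "configure", "api"}, load_technical_docs),
}


def select_context(question: str) -> str:
    """Return the context from the source that best matches the question."""
    words = set(re.findall(r"[a-z0-9]+", question.lower()))
    best_name, best_score = None, 0
    for name, (keywords, _) in ROUTES.items():
        score = len(words & keywords)
        if score > best_score:
            best_name, best_score = name, score
    if best_name is None:
        return ""  # no source matched, so add no extra context
    return ROUTES[best_name][1]()


if __name__ == "__main__":
    print(select_context("How much does the premium plan cost?"))
    print(select_context("I get an error when I install the app"))
```

The point of the sketch is the ordering: the context is chosen per question, at request time, rather than fixed in advance.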

Conclusion

Prompt engineering taught us how to give clear instructions to AI assistants. Context engineering takes the next step, emphasizing the need to provide the most relevant information, in the moment, enabling AI agents to reason and act effectively and perform complex tasks. If it is done correctly, context engineering enables AI systems to work faster, more efficiently, and with greater accuracy and reliability - while reducing the risk of hallucinations.

Mastering context engineering is likely to be one key to maximizing the potential of AI as we move from chatbots to a world of autonomous agents and complex AI applications.

This blog was first published on the IBM Community.