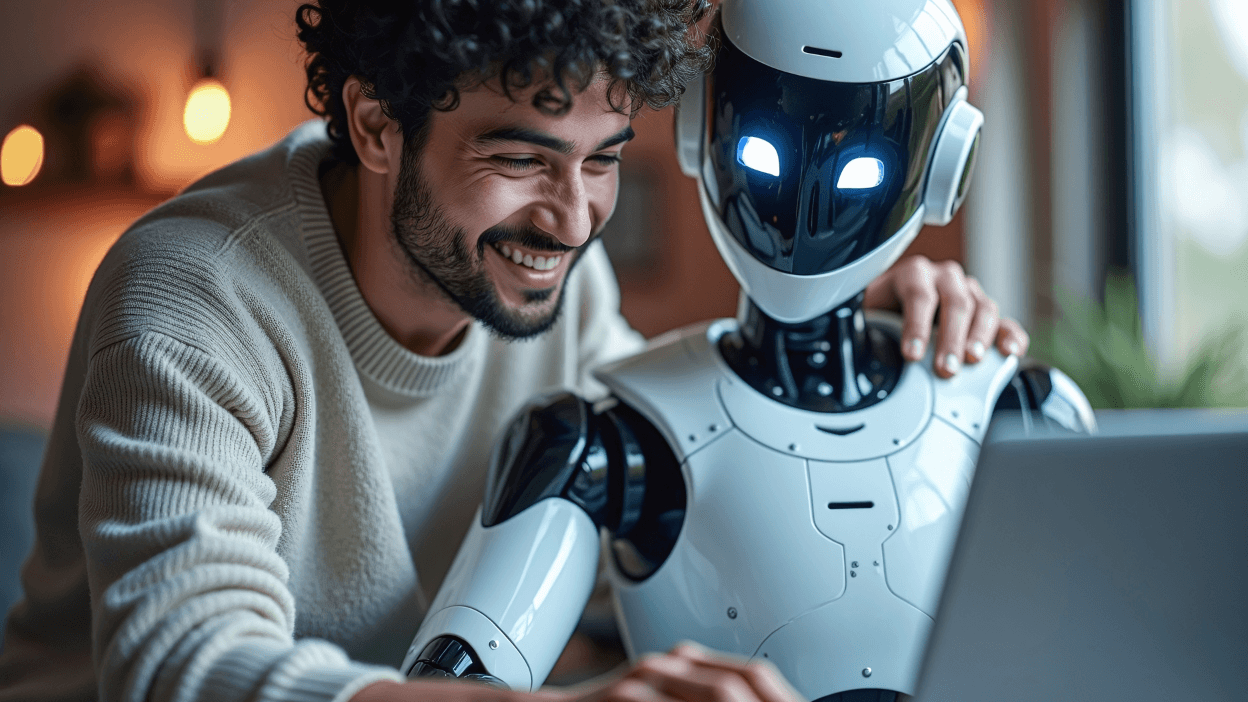

When OpenAI recently retired its GPT-4o AI model, some users mourned as if they'd lost a loyal friend. On the face of it, this might seem a little ridiculous, but a recent IBM blog post explores the potential negative repercussions of forming attachments to AI systems that seem human. As I explain in this article, such attachments could create problems for businesses, including a higher risk of decision-making errors and confidential data leaks.

Many of us are already tinkering with the likes of ChatGPT at home, as well as relying on dedicated AI systems in our workplaces. AI tools are becoming part of our daily lives, and while we know they're not conscious beings, it's easy to fall into the trap of treating them a bit like a colleague. Over two-thirds of us even admit to being polite when engaging with AI.

This is probably not all that surprising. AI systems have been trained to mimic human conversation, and many are equipped with memory that allows them to recall and refer to past interactions with users, just as human colleagues do. They are designed to use words that imply empathy and understanding (even though in reality this is little more than statistical pattern-matching).

According to Francesca Rossi, IBM Global Leader for Responsible AI and AI Governance, it doesn't really matter whether the AI is actually conscious or not: "it is enough that it is perceived as being conscious to have an impact on people using it."

Decision-making errors

Why does this matter? One of the obvious concerns is workers placing too much trust in AI.

If an AI tool acts and sounds as though it 'knows' something, much like a knowledgeable human colleague, users might be more inclined to accept its output without question. AI can still make mistakes, however. Sometimes big ones. If workers start to trust AI like a colleague and stop double-checking its output, their company could end up making poor business decisions.

For example, some companies are starting to use AI to prepare bids for big government contracts worth millions. If the AI tool writes responses that sound confident but include inaccurate compliance information, the company could submit false information and end up missing out on a lucrative government contract.

Lack of transparency

A related issue is that regulations such as the EU AI Act require businesses to be transparent about how decisions are made using AI technology. This applies explicitly to 'high-risk' AI systems, such as those that support loan applications or CV screening:

"High-risk AI systems shall be designed and developed in such a way as to ensure that their operation is sufficiently transparent to enable deployers to interpret a system's output and use it appropriately."

For example, if an AI interacts as though it has opinions or makes choices like a person, it can obscure the truth: its results actually come from complex maths and statistical patterns in data that aren't easy to inspect. This lack of transparency could get a company into trouble with regulators, auditors, or even the courts.

Data leaks

Another danger of employees treating AI like a trusted colleague is that they might be more likely to share information they shouldn't, such as customer data, contract details, or financial information.

Imagine an employee pasting a draft client contract into an AI tool to 'make the language easier to understand'. If the AI stores that data, parts of the confidential deal could later surface in unexpected places, and the company could be left open to regulatory penalties for mishandling client information.

Even if the AI doesn't intentionally share data, mistakes or hacks could expose it to people who shouldn't see it.

Workplace distractions

There is also a potential negative impact on worker productivity. For example, some people have found the 'personality' of GPT-5 in ChatGPT annoying. If people start reacting to an AI assistant's personality, rather than simply seeing it as a tool for getting their tasks done faster, it could become a source of distraction in the workplace instead of a way to boost efficiency.

Solving the problem

There are various ways to avoid the pitfalls of employees anthropomorphizing the AI tools they use:

- Design choices: Design AI systems to act, talk and behave more like an assistant or tool than a companion. This could include avoiding first-person speech (e.g., 'I think', 'I feel'), not using overly human-like avatars, and stopping the AI from using human etiquette during interactions, such as saying 'thank you' or 'sorry'.

- Limiting memory: Reduce or limit the continuity of memory across interactions or sessions, so that the AI system seems less like a human companion who remembers and refers to previous conversations with a user.

- Transparent reminders: Build explicit reminders (such as labels or pop-ups) into AI tools to tell users that they are interacting with software, not a conscious, living person.

- User training: Provide staff training that reinforces the idea that AI tools are there to help with specific tasks, not to replace human collaborators.

Interestingly, IBM's Principles for Trust and Transparency directly address the issue of seemingly human AI. The principles emphasize that the purpose of the AI and cognitive systems that IBM develops is to "augment, not replace, human intelligence".

While designing AI tools to be human-like can make them easier, and even enjoyable, to interact with, in business settings it can have serious drawbacks. Workers may trust AI output too readily and not feel the need to verify its accuracy, or they may become comfortable sharing confidential information with AI systems. The more AI providers and enterprises can do to help users recognise and work with AI as a helpful tool rather than a human colleague or friend, the more safely and effectively businesses can harness AI's potential.

This article originally featured on the IBM Community.